HTC announced the first class of 33 companies to join its Vive X accelerator back in July, but has since remained tight-lipped about what those startups have been up to.

This week, we’ve heard the first update from one of those companies, ObEN. The Pasadena-based group raised $7.7 million in a Series A round of funding to accelerate its work creating realistic and simple avatars for VR and AR. Investors include CrestValue Capital, Cybernaut Westlake Partners, and Leaguer Venture Investment.

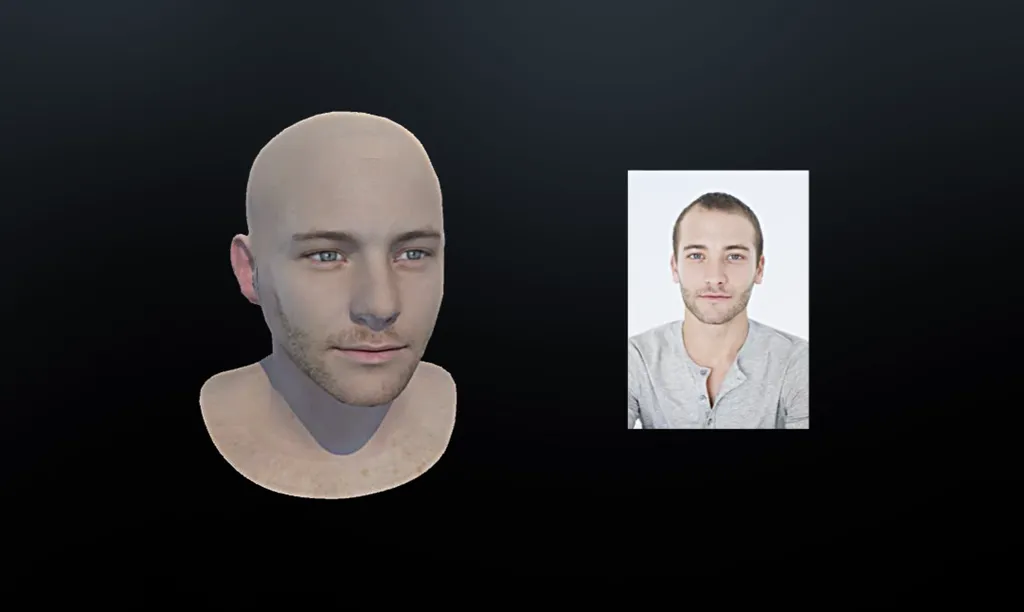

ObEN is developing a way to build an accurate avatar of a person, complete with a replica of their voice, from just a single smartphone photo and a short audio sample. Using proprietary AI technology, the company aims to let you bring these avatars into a variety of VR and AR experiences. A press release specifically claims that its approach surpasses avatars that "depend upon cartoon-like characteristics," perhaps a shot at the avatars in the Facebook social VR experience shown on stage at Oculus Connect 3 last month.

The video below shows an example of a 3D model created from such an image.

The company expects to launch its technology in Q1 2017. It will be interesting to see how it gets integrated into other experiences, especially with Oculus soon launching its own Avatars system, which, like Facebook's demo, is more cartoonish than what ObEN is trying to achieve. Oculus Chief Scientist Michael Abrash, though, doesn't think truly convincing virtual humans will arrive for many years to come.

Interestingly, none of the Series A investors mentioned are listed as members of HTC’s VR Venture Capital Alliance (VRVCA), the group of investors that gather once every two months to review pitches from startup VR companies, including those from Vive X. Though unconfirmed, it was thought that ObEN might have been involved in the group’s first meeting back in September. We’ve reached out to HTC to clarify if the VRVCA had any involvement in this funding round.

As part of Vive X, however, ObEN will have had access to mentorship and workspace, though the company is currently based at the technology incubator Idealab. It lists a range of openings in computer vision and speech recognition on its official website.