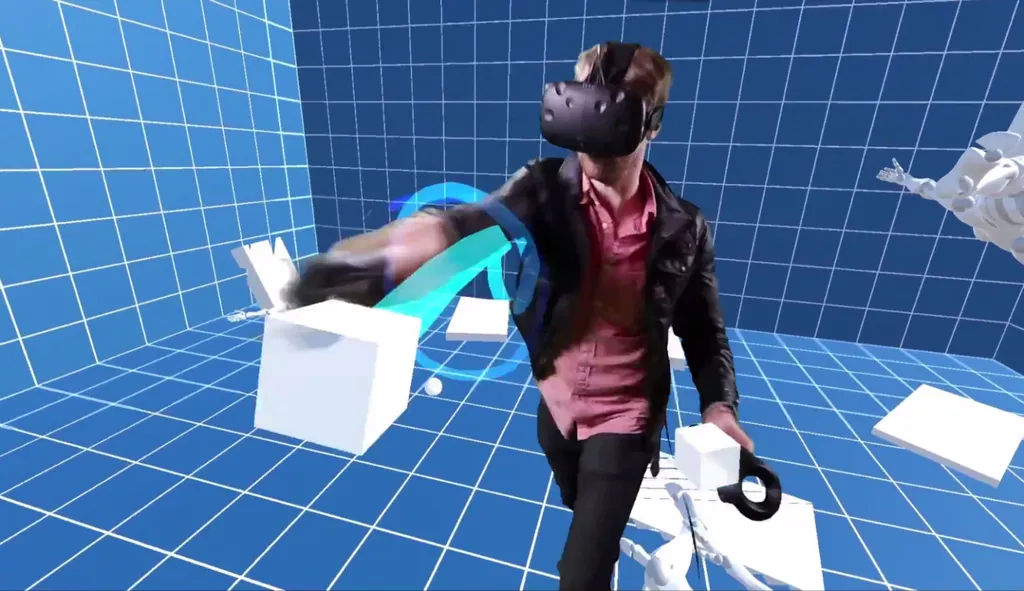

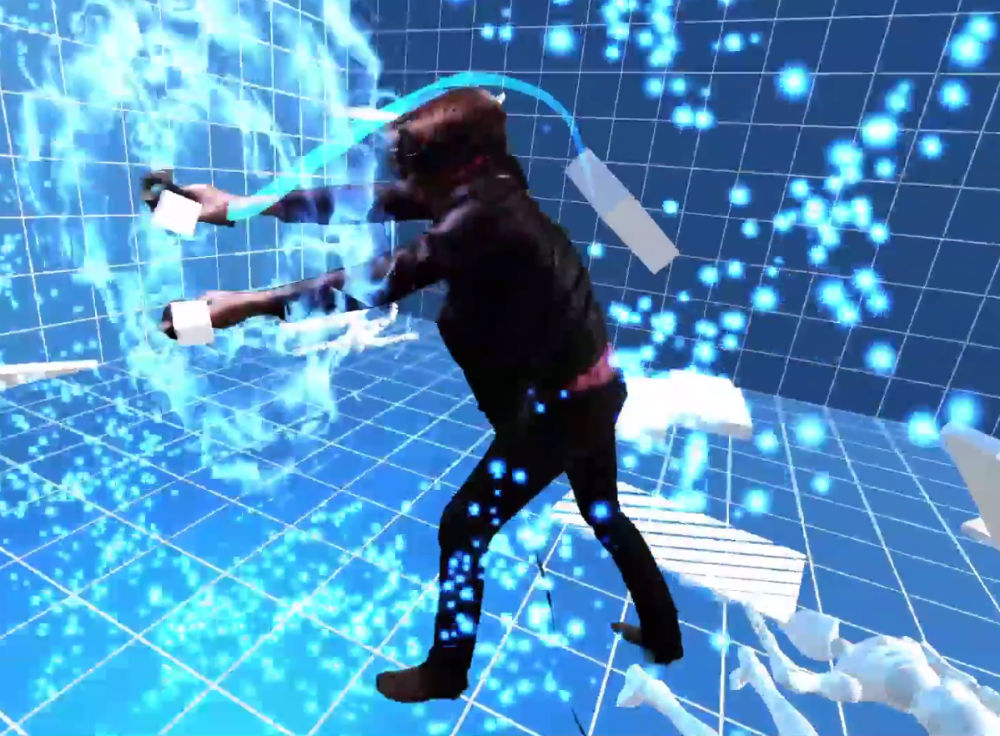

The VR Infinite Gesture plug-in for Unity is intended to help developers integrate gesture recognition into their software. Deep down, many of us have dreamed of wielding some magical energy, like the Force in Star Wars or a freeze beam that stops people in their tracks. This software might help people realize those dreams in VR.

The software is made by Tyler Hutchison and Edwon, who declined to give his full name but owns Edwon Studio in Culver City. The pair star in a stylized mixed reality trailer, shot at the studio, that shows off the plug-in’s features. Check it out at the top of the post. The plug-in is available on the Unity Asset Store and sells for around $90 at the time of this writing. If you try it, please share your impressions in the comments.

After seeing the trailer making the rounds among VR professionals on Twitter, I reached out to @edwonia to find out more about the project.

Solving a pet problem

Edwon is primarily an artist and Hutchison is mainly a programmer, though both take on other roles. They met in L.A. at an indie game space called Glitch City and began working together.

“Our long term goal is to make truly interactive characters for VR and AR,” Edwon wrote. “That’s why we made the gesture tech, so that our characters could perceive human intent through body movement. I have a lot of issues with the standard formula for VR characters right now, which boils down to basically choose your own adventure books, or just movies.”

The pair pitch the plug-in as being “smart, so you don’t have to be.” They are also working on a game called Puni involving virtual pets and plan to use the gesture tools “in a pretty unique way.”

“We just kind of assumed that people would want to make that type of game with the tech, you know like use force powers, become a wizard, airbend, etc,” Edwon wrote. “It’s perfect for that type of thing too! But we’re so focused on our game we didn’t have time to make all those ideas so we figured why not let it loose into the wild and see what people make?”

Recognizing gestures with neural nets

The pair say they use “neural networks” to learn complex gestures. Neural networks, computer systems loosely modeled on how the human brain works, are a buzzword these days, but for good reason.

“[Neural networks] can recognize things in pictures, or make predictions about what movie you want to watch, or transcribe speech,” Edwon wrote. “The challenge for a.i. developers is how to turn your data into something the neural net can understand, and I think we found a pretty good way to do it… every player does a gesture slightly different, but as long as you’ve trained the network with enough examples, it can recognize gestures just as effectively as a human.”

The source code is included with the plug-in if you want to investigate how they did it.
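Edwon doesn’t spell out how the plug-in turns controller motion into network input, so what follows is only a minimal sketch of one common approach, not the plug-in’s method. It resamples a recorded 3D path to a fixed number of evenly spaced points, moves its centroid to the origin, scales it to unit size and flattens it, so fast, slow, large or small versions of a gesture all become a same-length list of numbers a network can accept. The function names and the default of 16 points are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def path_length(points: List[Point]) -> float:
    """Total distance travelled along a recorded path."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def resample(points: List[Point], n: int) -> List[Point]:
    """Return n points spaced evenly along the path (hypothetical helper)."""
    if len(points) < 2 or n < 2:
        raise ValueError("need at least two input points and n >= 2")
    step = path_length(points) / (n - 1)
    if step == 0:
        return [points[0]] * n  # the controller never moved
    pts = list(points)
    out = [pts[0]]
    carried = 0.0  # distance covered since the last emitted point
    i = 1
    while i < len(pts) and len(out) < n:
        prev, cur = pts[i - 1], pts[i]
        seg = math.dist(prev, cur)
        if carried + seg >= step and seg > 0:
            t = (step - carried) / seg
            new = tuple(p + t * (c - p) for p, c in zip(prev, cur))
            out.append(new)
            pts.insert(i, new)  # keep measuring from the point just emitted
            carried = 0.0
        else:
            carried += seg
        i += 1
    while len(out) < n:  # floating-point rounding can leave the final point unemitted
        out.append(pts[-1])
    return out


def to_feature_vector(points: List[Point], n: int = 16) -> List[float]:
    """Resample, center on the centroid, scale to unit size, then flatten to 3 * n numbers."""
    pts = resample(points, n)
    cx, cy, cz = (sum(axis) / n for axis in zip(*pts))
    centered = [(x - cx, y - cy, z - cz) for x, y, z in pts]
    scale = max(math.hypot(*p) for p in centered) or 1.0
    return [coord / scale for p in centered for coord in p]


# A hypothetical straight swipe with unevenly spaced samples,
# as happens when the player's hand speeds up or slows down.
swipe = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]
features = to_feature_vector(swipe)
print(len(features))  # 48: 16 points x 3 coordinates
```

Fixing the input length matters because a simple feed-forward network expects the same number of inputs every time, while raw controller recordings vary in length depending on how long the player takes to finish the motion.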

Next steps

They built the tool over several months alongside their game, which is slated for release later this year. When Edwon and Hutchison discussed how to track gestures in Puni, they decided neural networks “are perfect for that type of problem because a gesture has a lot of fuzzy data.”

Edwon:

In traditional gesture tracking you would have to manually program in each gesture. Some solutions might use velocity, relative position, etc… Another common solution is comparing a gesture to a pre-made shape and seeing if the user has traced along it. Both these solutions have lots of edge cases that make it extremely difficult to get reliable gesture tracking. They end up being difficult to develop and frustrating for the end user as well.

Especially once you add a 3rd dimension, aka VR motion controllers like Vive and Oculus Touch, it gets extremely difficult. Some games on Vive have already implemented some gesture tracking, but I can’t imagine it was easy for the devs. With that type of code you really have to constrain and simplify the problem down. It limits your creativity.

We wanted to make an extremely flexible solution that could handle any gesture you could imagine. A neural network turned out to be the perfect solution.
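The template-matching approach Edwon describes can be sketched in a few lines. This minimal version assumes the player’s path and every template have already been resampled to the same number of points and normalized; it scores each template by the mean distance between corresponding points and rejects the best match if that score exceeds a threshold. The template names, the helper functions and the 0.25 threshold are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]


def mean_point_distance(a: List[Point], b: List[Point]) -> float:
    """Average distance between corresponding points of two equal-length paths."""
    if len(a) != len(b) or not a:
        raise ValueError("paths must be non-empty and the same length")
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def match_template(
    gesture: List[Point],
    templates: Dict[str, List[Point]],
    threshold: float = 0.25,  # hypothetical, hand-tuned tolerance
) -> Optional[str]:
    """Return the name of the closest template, or None if nothing is close enough."""
    best_name, best_score = None, math.inf
    for name, shape in templates.items():
        score = mean_point_distance(gesture, shape)
        if score < best_score:
            best_name, best_score = name, score
    return best_name if best_score <= threshold else None


templates = {
    "swipe_right": [(-1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    "raise_up": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
}
wobbly_swipe = [(-1.0, 0.1, 0.0), (0.0, -0.1, 0.0), (1.0, 0.1, 0.0)]
diagonal = [(-1.0, -1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
print(match_template(wobbly_swipe, templates))  # swipe_right
print(match_template(diagonal, templates))  # None
```

The hard-coded threshold illustrates the edge cases the quote mentions: set it too tight and sloppy but valid gestures are rejected, set it too loose and unrelated motions are accepted, and a diagonal that sits between two templates simply matches neither.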

Edwon’s website hosts documentation for the plug-in, including a roadmap for future updates. Planned features include tracking both hands (it currently tracks only one at a time) and an option to spread out training “over time to prevent in-game performance slowdown.”
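The roadmap item about spreading out training “over time” points to a familiar game-loop technique: instead of training in one long blocking call, do a small, bounded slice of the work each frame. The sketch below illustrates that idea only and is not the plug-in’s implementation; `TimeSlicedTrainer`, `steps_per_frame` and the one-weight `ToyModel` standing in for a real network are all hypothetical.

```python
from typing import Callable


class TimeSlicedTrainer:
    """Runs a long training job a few steps at a time (hypothetical helper)."""

    def __init__(self, train_step: Callable[[], None], total_steps: int, steps_per_frame: int) -> None:
        if steps_per_frame < 1:
            raise ValueError("steps_per_frame must be at least 1")
        self.train_step = train_step
        self.remaining = total_steps
        self.steps_per_frame = steps_per_frame

    @property
    def done(self) -> bool:
        return self.remaining <= 0

    def update(self) -> None:
        """Call once per frame: run at most steps_per_frame training steps."""
        for _ in range(min(self.steps_per_frame, self.remaining)):
            self.train_step()
            self.remaining -= 1


class ToyModel:
    """Stand-in for a network: one weight w trained by gradient descent on (w - 3)^2."""

    def __init__(self) -> None:
        self.w = 0.0

    def train_step(self, learning_rate: float = 0.1) -> None:
        gradient = 2.0 * (self.w - 3.0)
        self.w -= learning_rate * gradient


model = ToyModel()
trainer = TimeSlicedTrainer(model.train_step, total_steps=200, steps_per_frame=10)
frames = 0
while not trainer.done:
    trainer.update()  # in a game, one call per rendered frame
    frames += 1
print(frames)  # 20
print(round(model.w, 6))  # 3.0
```

A real engine would more likely budget each slice by elapsed milliseconds rather than by step count; a fixed step count just keeps this example deterministic.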