The final demo at a day-long visit to Meta’s research offices in Washington state was easily the most memorable — true telepresence.

Realizing true telepresence may be one of the hardest ideas to bring to consumer hardware. It may also be the key to unlocking VR’s killer use case. Meta calls its approach to the challenge Codec Avatars, and the technology could change the world in ways we can hardly fathom right now.

“I think most people don’t realize what’s coming,” Yaser Sheikh, research director at Meta’s Reality Labs office in Pittsburgh, told UploadVR.

Earlier this year he suggested it was “five miracles away”.

Now?

“I would count three to four research miracles we need to solve before it gets onto the on-board compute on Quest Pro,” Sheikh said.

Codec Avatars aim to make you feel as though you’re sharing a room, 1:1, with another person who isn’t physically present. If those miracles come to pass, the concept could transform communications as fundamentally as the telephone did. It may be the closest we ever come to teleportation, and the term “telepresence” takes on new meaning in the context of the most impressive of three Codec Avatar demos presented by Meta.

Codec Avatars 2.0


In the Codec Avatars 2.0 demo I came face to face with Jason Saragih, a research director for Meta in Pittsburgh. I moved my head to the right and left and his eyes maintained eye contact with me. As I conversed, I moved a light around his face to highlight his skin and expressions from different angles. The whole time I picked up on the same sorts of subtle facial movements I’d been keying in on all day from people sharing the same physical room with me like Mark Zuckerberg, Andrew Bosworth, Michael Abrash, and others.

The difference was that Saragih shared my space virtually. He was physically located in Pittsburgh – over 2,500 miles away by road from where I stood in Washington state. He wore a research prototype VR headset to translate his facial movements and I wore a standard Rift S powered by a PC. We had a cross-continental VR call that, for lack of a better description, crossed the “uncanny valley” in virtual reality. The first call of this fidelity made at Reality Labs’ offices occurred in 2021.

“That’s actually probably the first time anyone has done that in real time for a real person,” Sheikh said. “It felt like what I would imagine Alexander Graham Bell’s moment of speaking with his assistant felt like.”

So what’s the hitch? Why can’t we replace phones immediately with Codec Avatars providing true telepresence? It’s too hard to do the proper scanning. Right now, creating an avatar capturing both the face and body of a person at that fidelity requires several hours in two different ultra-expensive scanning systems followed by at least four weeks of processing time.

Crossing The Uncanny Valley

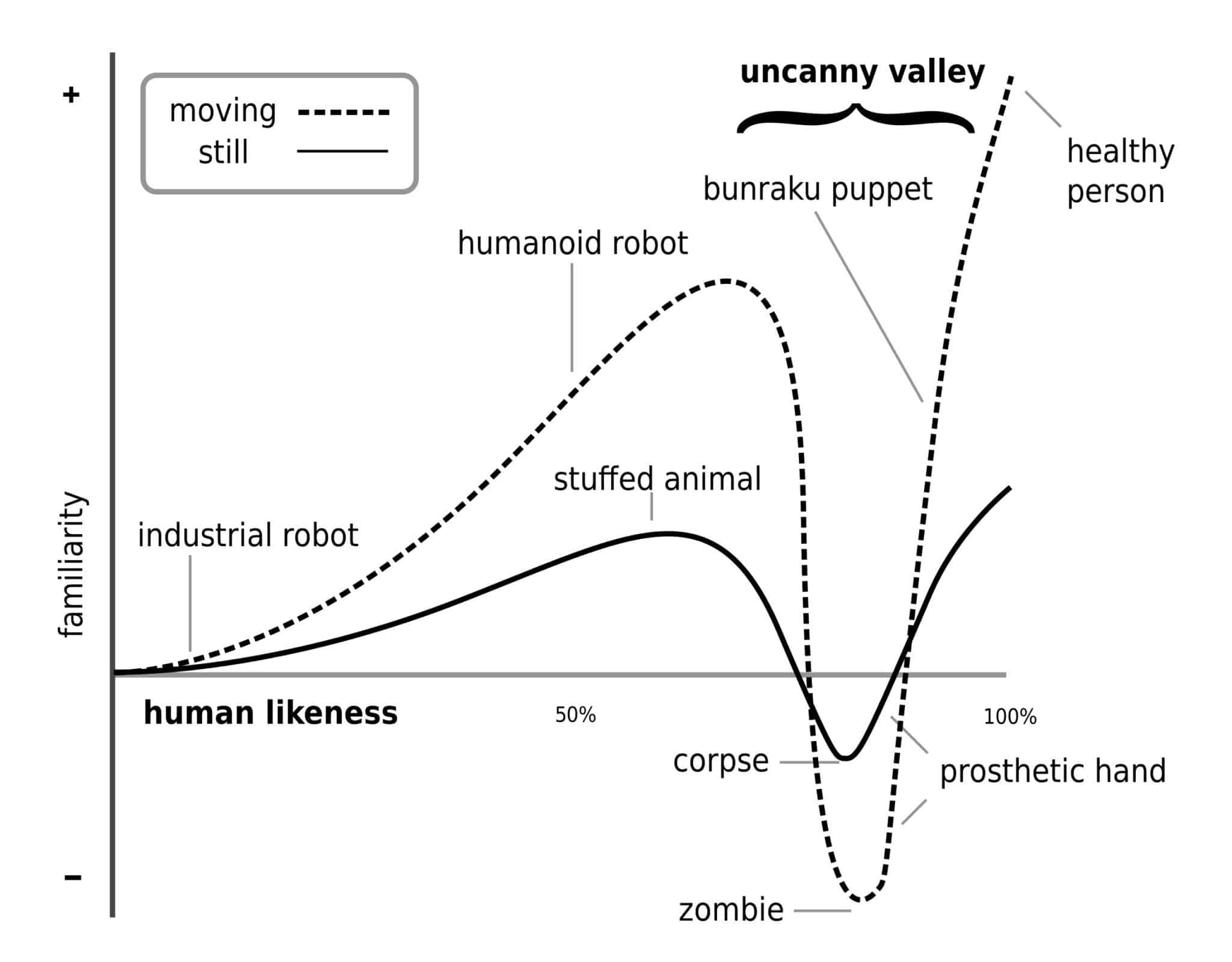

The “uncanny valley” refers to a well-known concept describing the discomfort people feel seeing faces that resemble humans but miss the mark in meaningful ways.

In film, you feel comfortably immersed in the story looking at something like the cute Baymax robot but constantly distracted looking at more human-like faces in heavily motion-captured works like the 2004 film The Polar Express. You can chart the “likeability” or “familiarity” of those faces, and as “realism” increases there’s a point at which people simply get freaked out looking at something almost-but-not-quite human. Likeability drops and you’re left with what looks like a valley on the chart. If you get enough things exactly right about the digital representation, you come up the other side of the valley, where people begin to accept the likenesses again. Arguably, the 2009 James Cameron movie Avatar is the highest-profile example of crossing that chasm in movies for extended periods of time, as it presented human-like digitized characters that no longer distracted most viewers.

Doing the same thing in real time with a live human in stereoscopic virtual reality is a much harder task. Failing to meet this standard is the reason so many people criticized the avatar Zuckerberg showed from Horizon Worlds earlier this year. It’s also the reason most real-time avatars from major companies are closer to babies than photorealistic representations – cartoon babies sit more comfortably on the left side of the valley. An article from The Information earlier this year, for example, referred to a 2019 demo of avatars at Apple, from the teams developing its VR headset tech, that still fell into the uncanny valley.

And indeed, two other demos provided by Meta on either side of the live cross-continental call made clear just how far there is to go.

The Sociopticon & 3 Miracles

On either side of the most impressive avatar research demo Meta ever presented to press were two more that underscored the incredible challenge ahead.

To the right, Meta showed an avatar scanned from a cell phone. It required a 10 minute phone scan and a few hours of processing time. Meta presented the same concept at SIGGRAPH 2022 recently and, while impressive in its own way, the fidelity of the avatar fell deeply into the uncanny valley.

To the left, Meta showed a full-body avatar captured in what the company calls the “Sociopticon”. It contains 220 high-resolution cameras and requires hours of scanning and days of processing to turn out one hyper-realistic scanned Codec Avatar. I could change the clothes on this avatar at any moment as the pre-recorded person performed the same movements over and over. As he jumped, I could walk around the avatar and see each new set of clothing believably bunch up and drape around his body exactly as I would expect from physical clothing.

Sheikh said the three demos represent the “miracles” required to transform communications in the 21st century the way the telephone changed the 20th century.

“It’s actually the confluence of these three is that is what we need to solve,” he said. “You need to make it as easy as scanning yourself with a phone. It has to be of the quality of the Codec Avatars 2.0 and it needs to have the full body as well.”

If there’s a fourth “miracle”, then, it is to drive the hyper-realistic scanned Codec Avatar from the sensors and processors embedded in a VR headset that can be mass produced and priced within reach of buyers.

“There’s a lot of stuff to work out,” Meta’s top researcher Michael Abrash told UploadVR. “But I say the real miracle is how do you scale it?”

Authenticity & Trust

Meta researchers said they would not allow anyone to drive someone else’s Codec Avatar. This was alluded to in 2019 in some of the first public reveals of the technology and reiterated during the recent SIGGRAPH demo of the phone-scanned version of the avatars.

“Internally all of us view the fact that authenticity – the fact that you are able to trust that when you are here in VR or AR you are who you are – the other source has trust,” Sheikh said. “That’s kind of like an existential requirement of this technology.”

Just as Apple’s Face ID scans your face and headsets like HoloLens 2 or Magic Leap 2 authenticate users by their irises, Meta’s own headsets may one day do something similar to ensure the person wearing the headset is the one projecting their specific scanned Codec Avatar into the “Metaverse”.

“What this thing will allow us to do is share spaces with people,” Sheikh said. “Video conferencing let us survive the pandemic, but we all understood at the end of it what was missing…it was the feeling of sharing space with one another.”