Working on VR audio technology, I often hear questions like “You mean 3D audio?” or “How is it different from existing 3D audio technology?” If VR audio is defined as creating and delivering audio signals suited to VR content, then the term 3D audio does not fully reflect what audio can do in a virtual environment.

Listening to interactive, three-dimensional audio in VR

Having x, y and z axis information is what makes sound three-dimensional. Traditional ‘surround sound’ or ‘3D audio’ lacks height information. A 5.1-channel system, for example, is really 2D audio: its speakers are placed at ear height and cover only the front, back, left and right.
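To make the missing height axis concrete, here is a small sketch that converts speaker directions into x/y/z unit vectors. The azimuths are the nominal ITU-R BS.775 placements for a 5.1 layout (center at 0°, front pair at ±30°, surrounds at ±110°, within the 100° to 120° range the recommendation allows; the LFE channel has no direction). The coordinate convention (x forward, y left, z up) is an assumption chosen for illustration.

```python
import math

def direction_vector(azimuth_deg, elevation_deg):
    """Unit vector (x forward, y left, z up) for a direction given in degrees."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))

# Nominal 5.1 main-speaker azimuths; positive azimuth = to the listener's left.
# All of them sit at ear height (elevation 0).
SPEAKERS_5_1 = {"C": 0.0, "L": 30.0, "R": -30.0, "Ls": 110.0, "Rs": -110.0}

def layout_vectors(layout, elevation_deg=0.0):
    """Direction vector for every speaker in a layout at a common elevation."""
    return {name: direction_vector(az, elevation_deg) for name, az in layout.items()}

vectors = layout_vectors(SPEAKERS_5_1)

# Every z component is zero, so this layout cannot place a sound above or
# below the ear plane; a source at 45 degrees elevation needs a nonzero z.
no_height = all(v[2] == 0.0 for v in vectors.values())
overhead_z = direction_vector(0.0, 45.0)[2]
```

Changing the azimuths alone never produces a nonzero z component; only adding elevated directions (height speakers, or a binaural renderer that can simulate them) does.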

True 3D audio became possible in VR with the adoption of binaural rendering technology. Binaural rendering replicates what we hear in the real world by transforming three-dimensional audio signals into a two-channel (left ear, right ear) stereo output, so you can hear true 3D sound even through regular headphones. What lets VR audio immerse users in a scene more than ever before is one important factor: user interaction.
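A common way to implement binaural rendering is to filter each sound source with a pair of head-related impulse responses (HRIRs), one per ear, for the source's direction. The sketch below shows that idea with plain direct-form convolution. The two short HRIRs are made-up toy values standing in for measured data, not real filters.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution; output length = len(signal) + len(ir) - 1."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out

def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono source with the HRIR pair for its direction -> (left, right)."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Toy, hypothetical HRIR pair for a source on the listener's left:
# the left ear hears it earlier and louder (interaural time and level differences).
hrir_left = [1.0, 0.3, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.5, 0.15]   # two-sample delay, lower level

# A single impulse makes the per-ear filtering easy to see.
left, right = render_binaural([1.0, 0.0, 0.0], hrir_left, hrir_right)
```

With several sources, each one is filtered with the HRIR pair for its own direction and the per-ear results are summed into the final stereo output.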

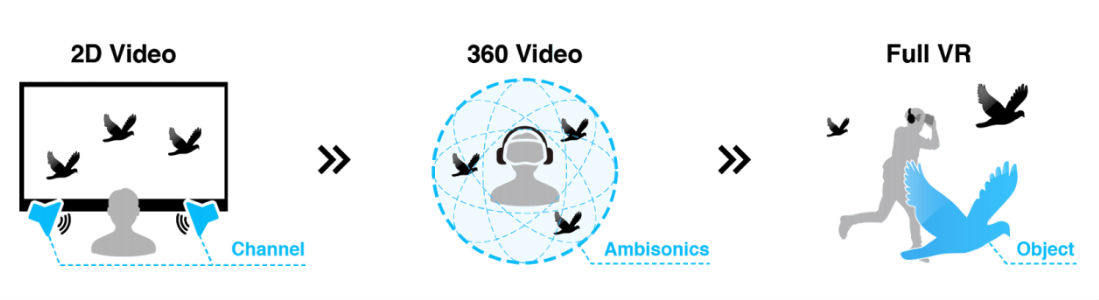

Previously, when there was no user interaction in the story, video and audio were pre-rendered on the production side for a fixed flat screen and a fixed loudspeaker layout, and this 2D content was simply played back on the end-user side. In VR, by contrast, the user's head orientation and interactions change constantly, so rendering has to happen on the end-user side. Sound cannot be pre-rendered during VR production because it has to change in real time according to the listener's viewpoint and movement. Performing real-time binaural rendering on an end user's mobile device is not an easy task.
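Because the sound field has to follow the head, a renderer must recompute where each source lies relative to the listener on every frame. This minimal sketch handles yaw only and uses a hypothetical sequence of head-tracker readings; a real renderer would also account for pitch and roll.

```python
def wrap_degrees(angle_deg):
    """Wrap an angle to the range (-180, 180]."""
    wrapped = (angle_deg + 180.0) % 360.0 - 180.0
    return 180.0 if wrapped == -180.0 else wrapped

def relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Azimuth of a world-fixed source as heard from the current head yaw (positive = left)."""
    return wrap_degrees(source_azimuth_deg - head_yaw_deg)

# A source fixed in the world 30 degrees to the listener's left.
SOURCE_AZIMUTH = 30.0

# Hypothetical head-tracker yaw readings, one per render frame.
head_yaw_per_frame = [0.0, 30.0, 90.0, -150.0]

# Re-derived every frame: 30 (left), 0 (ahead), -60 (right), 180 (behind).
relative_per_frame = [relative_azimuth(SOURCE_AZIMUTH, yaw) for yaw in head_yaw_per_frame]
```

The source never moves in the world, yet its rendered direction changes with every head turn, which is exactly why this step cannot be baked in during production.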

Binaural rendering is computationally demanding by nature: it uses complex functions to calculate how a sound travels from the source position to each of the listener's ears. Meanwhile, most of a mobile device's processing and bandwidth budget is already spent on video. This is why efficient, high-quality binaural rendering that eases the burden on the mobile device is key for the VR audio industry.
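A back-of-the-envelope estimate shows why the computational cost matters. Assuming time-domain (direct) convolution with one HRIR per ear, the work grows with the number of sources, the HRIR length and the sample rate. The scene parameters below are hypothetical.

```python
def direct_convolution_macs_per_second(num_sources, hrir_taps, sample_rate_hz, ears=2):
    """Multiply-accumulate operations per second for direct (time-domain) HRIR filtering.
    Each output sample of each ear needs hrir_taps multiply-accumulates per source."""
    return num_sources * ears * hrir_taps * sample_rate_hz

# Hypothetical scene: 16 sound sources, 256-tap HRIRs, 48 kHz output.
macs = direct_convolution_macs_per_second(16, 256, 48_000)
```

For these assumed numbers the estimate is 393,216,000 multiply-accumulates per second, before any room effects are added. FFT-based (block or partitioned) convolution is the usual way to cut this cost, which is one reason efficient rendering gets so much attention on mobile.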

Where VR Audio is At

Overwhelming immersiveness is what completes the ‘being there’ experience in VR. To enhance audio immersiveness, efforts have been made to increase audio signal resolution in both the ‘time’ and ‘spatial’ domains. Since resolution in the time domain (sampling frequency) already exceeds what humans can physically perceive, the spatial domain is where further development is needed.

Before the age of VR, a 2D video story was not influenced by the end user's interaction, and the spatial resolution of audio could be improved simply by adding more speakers around the listener and the frontal rectangular screen. The biggest hurdle for immersion was instead the ‘present room effect’: the real room around the viewer stayed present, so one could never fully be in the story because the virtual world was limited by the screen size. That has changed now that wearing an HMD (head-mounted display) and headphones blocks out the real world. VR certainly helps content consumers feel completely transported to a different world, but it still delivers different levels of immersiveness depending on the type of content.

In 360 video, or 3DOF (three degrees of freedom) content, the world is already pre-rendered. The three-dimensional space is projected onto a sphere: you can look in any direction you like, but you cannot walk around, because your position stays fixed in one spot. This is why Ambisonics, a way of recording and reproducing 3D sound as a snapshot, became such a popular audio format for 360 video. Just like 360 video, this spherical audio format can easily be rotated to follow head orientation (yaw, pitch, and roll). However, Ambisonics is limited to 360-type content, where the end user stays fixed at one position. Increasing the Ambisonics order does not add interactivity or 6DOF support; it only increases spatial resolution, just as increasing pixel resolution doesn't turn 360 video into walkable video.
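Rotating an Ambisonics snapshot to follow the head is cheap, which is part of its appeal for 360 video. The sketch below assumes first-order Ambisonics in ACN channel order (W, Y, Z, X) with SN3D normalization (the AmbiX convention) and handles yaw only; pitch, roll and higher orders need full rotation matrices. Note that it only rotates the field: nothing in it can move the listener to a new position.

```python
import math

def encode_foa(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one sample as first-order Ambisonics, ACN order (W, Y, Z, X), SN3D."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (sample,
            sample * math.sin(az) * math.cos(el),
            sample * math.sin(el),
            sample * math.cos(az) * math.cos(el))

def rotate_foa_yaw(frame, angle_deg):
    """Rotate the whole sound field about the vertical axis (positive = to the left).
    W and Z do not depend on azimuth, so only X and Y change."""
    w, y, z, x = frame
    a = math.radians(angle_deg)
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return (w, y_rot, z, x_rot)

def compensate_head_yaw(frame, head_yaw_deg):
    """Counter-rotate the field so world-fixed sources stay put when the head turns."""
    return rotate_foa_yaw(frame, -head_yaw_deg)

# A source 90 degrees to the left; the listener turns their head 90 degrees left.
frame = encode_foa(1.0, 90.0)
w, y, z, x = compensate_head_yaw(frame, 90.0)
# The source should now be straight ahead: x close to 1, y close to 0.
```

Counter-rotating by the head yaw keeps world-fixed sources in place: here a source 90 degrees to the left ends up straight ahead once the listener turns to face it.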

Meanwhile, full VR, or 6DOF (six degrees of freedom) content, is rendered in real time while the user interacts and moves around in the scene. This requires each object in the scene to be controlled individually, rather than as a chunk of pre-configured video and audio. When each sound source is delivered to the playback side as an individual object signal, rendering can truly reflect both the environment and the way the user interacts within it. The full control that object-based audio offers can also be used in 2D or 360 video, but its potential is best realized in full VR.
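With object-based audio the renderer keeps each source's position, so it can recompute direction and level as the listener both turns and walks, which a single Ambisonics snapshot cannot do. This is a horizontal-plane sketch with hypothetical `AudioObject` and `Listener` types and a simple inverse-distance gain law capped at a reference distance; production renderers add elevation, room acoustics and binaural filtering on top.

```python
import math
from dataclasses import dataclass

@dataclass
class AudioObject:
    """Hypothetical sound object with a world position in metres (x forward, y left)."""
    name: str
    x: float
    y: float

@dataclass
class Listener:
    """Hypothetical listener pose: position in metres, yaw in degrees (positive = left)."""
    x: float
    y: float
    yaw_deg: float

def object_render_params(obj, listener, ref_distance=1.0):
    """Return (relative azimuth in degrees, gain) for one object as heard by the listener.
    Assumed gain law: 1/r beyond ref_distance, capped at 1.0 inside it."""
    dx, dy = obj.x - listener.x, obj.y - listener.y
    distance = max(math.hypot(dx, dy), ref_distance)
    world_az = math.degrees(math.atan2(dy, dx))
    # Express the direction in the listener's frame and wrap to [-180, 180).
    rel_az = (world_az - listener.yaw_deg + 180.0) % 360.0 - 180.0
    return rel_az, ref_distance / distance

guitar = AudioObject("guitar", x=2.0, y=0.0)
start = Listener(0.0, 0.0, 0.0)     # at the origin, facing +x
moved = Listener(2.0, -1.0, 90.0)   # walked forward and to the right, then turned left
az_start, gain_start = object_render_params(guitar, start)
az_moved, gain_moved = object_render_params(guitar, moved)
```

The guitar goes from 2 m straight ahead (gain 0.5) to 1 m straight ahead (gain 1.0): the listener's change of position alters what they hear, not just the direction they face.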

VR Audio Moving Forward

While more and more VR content is being made in the full VR format, the audio industry is barely catching up with Ambisonics for 360 video. Second-order Ambisonics already requires a minimum of 9 channels, and higher-order Ambisonics is often not feasible because network bandwidth on mobile is limited, not to mention the constrained processing power allocated to audio.
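The 9-channel figure follows from the general rule that full-sphere Ambisonics of order N uses (N + 1)² channels. The sketch below tabulates channel counts and the raw bitrate they imply, assuming uncompressed 48 kHz, 16-bit PCM. Real delivery uses audio codecs, so actual bitrates are lower, but the quadratic growth in channel count remains.

```python
def ambisonics_channels(order):
    """Full-sphere Ambisonics of order N needs (N + 1)^2 channels."""
    return (order + 1) ** 2

def pcm_bitrate_mbps(channels, sample_rate_hz=48_000, bit_depth=16):
    """Uncompressed PCM bitrate in megabits per second (assumed 48 kHz / 16-bit)."""
    return channels * sample_rate_hz * bit_depth / 1e6

# (order, channels, uncompressed Mbit/s) for orders 1 through 4.
table = [(n, ambisonics_channels(n), pcm_bitrate_mbps(ambisonics_channels(n)))
         for n in range(1, 5)]
for order, channels, mbps in table:
    print(f"order {order}: {channels} channels, {mbps:.3f} Mbit/s uncompressed")
```

Under these assumptions, second order works out to 9 channels and about 6.9 Mbit/s, and fourth order to 25 channels and 19.2 Mbit/s.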

Some might argue that personalized audio is the most important challenge going forward. Until capturing exact anthropometric information requires far fewer resources than it does today, customization for each person's ear shape and head size will remain the last step to perfection. Luckily, four out of five people can already feel immersed in a VR scene with a generic binaural rendering process. What needs to be figured out in the foreseeable future is how to deliver interactive 3D audio from creators to consumers, across multiple platforms, without compromising content quality. Once best practices are determined and a recommended workflow is set, those practices should be standardized to improve interoperability.

Brooklyn Earick is a music producer, engineer and entrepreneur. He is currently working as Director of Business Development for G’Audio Lab in Los Angeles.