Facebook’s AR/VR research division is developing a non-invasive brain scanning technology as a potential input device for its future consumer AR glasses.

While Facebook hasn’t yet announced AR glasses as a specific product, the company has confirmed it is developing them. A Business Insider report earlier this year quoted a source as saying the device “resembled traditional glasses.”

In today’s blog post, Facebook describes its end goal as “the ultimate form factor of a pair of stylish, augmented reality (AR) glasses.”

A major challenge in shipping consumer AR glasses, however, is the input method. A traditional controller, such as those used with many VR devices, would not be practical for glasses you want to wear out and about on the street. Similarly, while voice recognition is now a mature technology, people tend not to want to speak potentially private commands aloud in front of strangers.

A brain-computer interface (BCI) could allow users to control their glasses, and even type words and sentences, simply by thinking.

“A decade from now, the ability to type directly from our brains may be accepted as a given,” states the blog post. “Not long ago, it sounded like science fiction. Now, it feels within plausible reach.”

Invasive Is Not An Option

Almost all high-quality BCI options today are invasive, meaning they place electrodes on or in the brain, which requires surgery. Elon Musk’s startup Neuralink plans to use a robot to insert tiny “threads” into the brain, but brain surgery is clearly still impractical for a mass-market product.

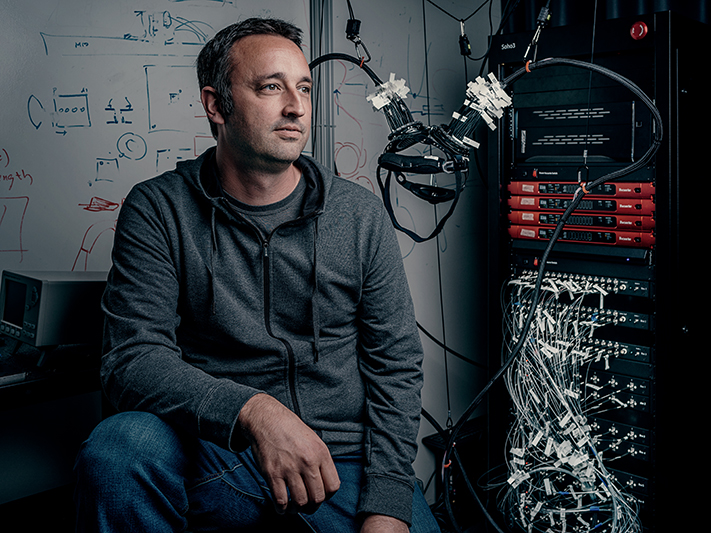

Facebook’s BCI program is directed by Mark Chevillet. Chevillet holds a PhD in neuroscience and was previously a professor and program manager in the neuroscience department at Johns Hopkins University. There he worked on a project to build a communication device for people who could not speak.

Invasive Proof Of Concept

Before working out how to read thoughts non-invasively, Chevillet needed to establish whether it was possible at all. He reached out to Edward Chang, a colleague and neurosurgeon at the University of California, San Francisco (UCSF).

To prove the concept, the UCSF researchers used invasive ECoG (electrocorticography) and achieved up to 76% accuracy in decoding utterances from the subjects’ brain activity.

Previous research projects had achieved this only with offline processing, but this result was achieved in real time. The system can currently detect only a limited vocabulary of words and phrases, but the researchers are working to expand it.

Near-Infrared Imaging

To achieve similar results without brain implants, new technologies and breakthroughs will be required. Facebook has partnered with Washington University and Johns Hopkins to research near-infrared light imaging.

If you’ve ever shone a red light against your finger, you’ll have noticed that some of the light passes through. The researchers are using the same principle to sense “shifts in oxygen levels within the brain” that occur as active neurons consume oxygen, an indirect measure of brain activity.
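The article doesn’t say exactly how Facebook’s collaborators turn near-infrared measurements into oxygen readings. A common textbook approach in near-infrared spectroscopy is the modified Beer-Lambert law: oxygenated (HbO) and deoxygenated (HbR) hemoglobin absorb near-infrared light differently, so measuring the change in optical density at two wavelengths yields two equations in two unknowns. The sketch below assumes that model. The function names and every numeric coefficient are hypothetical placeholders chosen for illustration, not measured values and not Facebook’s actual pipeline.

```python
# Minimal sketch of the modified Beer-Lambert law (an ASSUMED model, not
# Facebook's documented method): recover changes in oxygenated (HbO) and
# deoxygenated (HbR) hemoglobin from optical-density changes at two wavelengths.
# All coefficient values used below are HYPOTHETICAL placeholders.


def optical_density_change(d_hbo, d_hbr, eps, path_length, dpf):
    """Forward model: dOD(l) = (eps_HbO(l)*d[HbO] + eps_HbR(l)*d[HbR]) * d * DPF(l).

    eps is a list of (eps_hbo, eps_hbr) pairs, one pair per wavelength;
    dpf is the differential pathlength factor for each wavelength.
    """
    return [
        (e_hbo * d_hbo + e_hbr * d_hbr) * path_length * f
        for (e_hbo, e_hbr), f in zip(eps, dpf)
    ]


def hemoglobin_change(d_od, eps, path_length, dpf):
    """Invert the two-wavelength system with Cramer's rule."""
    # Divide out the effective path length (d * DPF), leaving eps . dc = y.
    y1 = d_od[0] / (path_length * dpf[0])
    y2 = d_od[1] / (path_length * dpf[1])
    (a, b), (c, d) = eps
    det = a * d - b * c
    if det == 0:
        raise ValueError("extinction coefficients must be linearly independent")
    d_hbo = (y1 * d - b * y2) / det
    d_hbr = (a * y2 - c * y1) / det
    return d_hbo, d_hbr


if __name__ == "__main__":
    # Hypothetical coefficients: HbR absorbs more at the first wavelength,
    # HbO absorbs more at the second.
    eps = [(0.6, 1.5), (1.1, 0.8)]
    dpf = [6.0, 5.5]
    od = optical_density_change(0.02, -0.01, eps, 3.0, dpf)
    # Should print values very close to 0.02 and -0.01.
    print(hemoglobin_change(od, eps, 3.0, dpf))
```

Using two wavelengths is the minimum needed to separate the two hemoglobin species; real systems typically add more channels and noise filtering on top of this basic inversion.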

This is similar to the techniques used by Mary Lou Jepsen’s startup Openwater. Jepsen worked at Facebook in 2015 as an executive for Oculus, researching advanced technologies for AR and VR. While Openwater’s goal is to replace MRI and CT scanners, Facebook is clear that it has no interest in developing medical devices.

The current prototype is described as “bulky, slow, and unreliable,” but Facebook hopes that if it can one day recognize even a handful of commands such as “home,” “select,” and “delete,” the technology could, combined with other technologies like eye tracking, become a compelling input method for its future AR glasses.

Direct Cellular Imaging

If near-infrared imaging of blood oxygenation isn’t sufficient, Facebook is looking into direct imaging of blood vessels and even neurons:

“Thanks to the commercialization of optical technologies for smartphones and LiDAR, we think we can create small, convenient BCI devices that will let us measure neural signals closer to those we currently record with implanted electrodes — and maybe even decode silent speech one day.

It could take a decade, but we think we can close the gap.”

Privacy & Responsibility

Of course, the idea of Facebook literally reading your brain may raise major privacy concerns. Such data could be used for targeted advertising with unprecedented fidelity, or for more nefarious purposes.

“We’ve already learned a lot,” says Chevillet. “For example, early in the program, we asked our collaborators to share some de-identified electrode recordings from epilepsy patients with us so we could validate how their software worked. While this is very common in the research community and is now required by some journals, as an added layer of protection, we no longer have electrode data delivered to us at all.”

“We can’t anticipate or solve all of the ethical issues associated with this technology on our own,” Chevillet says. “What we can do is recognize when the technology has advanced beyond what people know is possible, and make sure that information is delivered back to the community. Neuroethical design is one of our program’s key pillars — we want to be transparent about what we’re working on so that people can tell us their concerns about this technology.”