Current VR headsets can certainly make you believe you’re in a virtual world, but consumer devices can’t yet reproduce the exact way your eyes see the real world. New research being presented at SIGGRAPH by NVIDIA aims to fix that.

NVIDIA Research is demonstrating two ways of addressing the vergence-accommodation conflict. For those unfamiliar, our eyes have a highly evolved way of bringing objects at different distances into crystal clarity that involves both pointing the eyes together (vergence) and focusing their lenses (accommodation). Current VR headsets tend to use glass lenses that make your eyes focus at a fixed distance so they are relatively relaxed. But as you look at objects at different simulated distances in a current VR headset, your eyes converge on those distances while still focusing at the fixed one, and that mismatch between the two mechanisms can strain your eyes.
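To put rough numbers on that mismatch, here is a minimal Python sketch using textbook geometry rather than anything from NVIDIA's research. The function names, the 63 mm interpupillary distance, and the 2 m fixed focal distance are all illustrative assumptions, not specifications of any particular headset.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes point at an
    object straight ahead. ipd_m is an assumed interpupillary distance."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def accommodation_diopters(distance_m):
    """Focusing demand in diopters, which is 1 / distance in meters."""
    return 1.0 / distance_m

def conflict_diopters(virtual_distance_m, focal_distance_m=2.0):
    """Gap between where the eyes converge (the simulated distance) and
    where the optics force them to focus (an assumed fixed distance)."""
    return abs(accommodation_diopters(virtual_distance_m)
               - accommodation_diopters(focal_distance_m))

if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0, 10.0):
        print(f"object at {d} m: vergence {vergence_angle_deg(d):.2f} deg, "
              f"conflict {conflict_diopters(d):.2f} D")
```

Under these assumptions, a virtual object half a meter away asks the eyes to converge as if it were close while the lenses still hold focus at 2 m, a gap of 1.5 diopters. An object simulated at the focal distance itself produces no conflict.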

Companies like Magic Leap are reportedly working on technology that reproduces the way your eyes see the real world, but that technology hasn’t arrived for consumers yet. Meanwhile, researchers at conferences like SIGGRAPH present new work each year that might move these advances closer to financial feasibility for the mass market.

That’s what’s on the way from NVIDIA this year. According to a blog post:

Varifocal Virtuality is a new optical layout for near-eye display. It uses a new transparent holographic back-projection screen to display virtual images that blend seamlessly with the real world. This use of holograms could lead to VR and AR displays that are radically thinner and lighter than today’s headsets.

This demonstration makes use of new research from UC Berkeley’s Banks lab, led by Martin Banks, which offers evidence to support the idea that our brains use what a photographer would call chromatic aberration, which causes colored fringes to appear on the edges of an object, to help understand where an image is in space.

Our demonstration shows how to take advantage of this effect to better orient a user. Virtual objects at different distances, which should not be in focus, are rendered with a sophisticated simulated defocus blur that accounts for the internal optics of the eye.

So when a user is looking at a distant object it will be in focus. A nearby object they are not looking at will be more blurry just as it is in the real world. When the user looks at the nearby object, the situation is reversed.
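As a rough illustration of how much blur is involved, the sketch below uses a standard small-angle approximation from geometric optics: the angular size of the blur circle is roughly the pupil diameter multiplied by the defocus in diopters. This is not the renderer described above, which also models the eye's internal optics and chromatic effects. The function names and the 4 mm pupil are assumptions made for the example.

```python
import math

def defocus_diopters(object_distance_m, fixation_distance_m):
    """Dioptric gap between an object and the distance the eye is focused on."""
    return abs(1.0 / object_distance_m - 1.0 / fixation_distance_m)

def blur_angle_arcmin(object_distance_m, fixation_distance_m,
                      pupil_diameter_m=0.004):
    """Approximate angular diameter of the blur circle, in arcminutes.
    Small-angle approximation: blur (radians) ~= pupil (m) * defocus (D).
    The 4 mm pupil is an assumed typical value."""
    blur_rad = pupil_diameter_m * defocus_diopters(object_distance_m,
                                                   fixation_distance_m)
    return math.degrees(blur_rad) * 60

if __name__ == "__main__":
    # User fixates a distant object (5 m); a nearby object (0.5 m) blurs.
    print(f"near object blur: {blur_angle_arcmin(0.5, 5.0):.1f} arcmin")
    # User shifts gaze to the nearby object; now the distant one blurs.
    print(f"far object blur:  {blur_angle_arcmin(5.0, 0.5):.1f} arcmin")
```

In this simplified model the blur depends only on the dioptric gap between the two distances, so it is the same size whichever object the user fixates. What changes is which object receives it.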

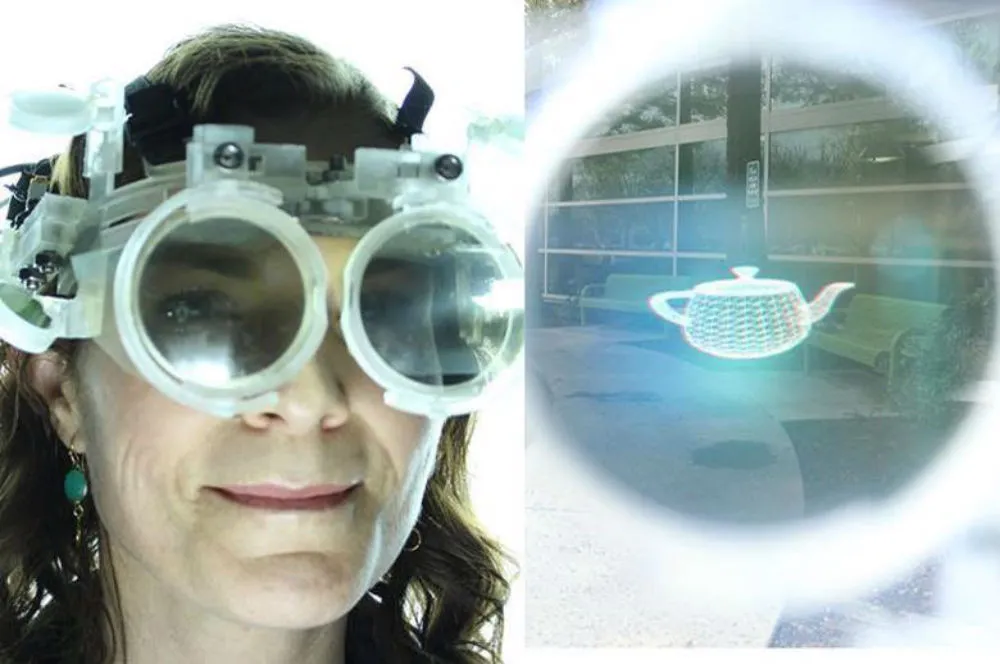

The second demonstration, Membrane VR, a collaboration between the University of North Carolina, NVIDIA, Saarland University, and the Max-Planck Institutes, uses a deformable membrane mirror for each eye that, in a commercial system, could be adjusted based on where a gaze tracker detects a user is looking.

The effort, led by David Dunn, a doctoral student at UNC, who is also an NVIDIA intern, allows a user to focus on real-world objects that are nearby, or far away, while also being able to see virtual objects clearly.

NVIDIA is also showing new haptic research that could point toward more immersive touch sensations in VR. Research from Cornell University in collaboration with NVIDIA includes two controllers, one that relays “a sense of texture and changing geometry” while the other “changes its shape and feel as you use it.”